AI-Powered Fake Accounts Surge in 2026: A US Threat

In early 2026, social media platforms are battling a surge in AI-generated fake accounts, with millions reportedly created and deployed every day. Generative AI tools let scammers, propagandists, and automated networks produce hyper-realistic profiles, complete with convincing bios, AI-generated photos, natural-sounding posts, and coordinated engagement, at unprecedented scale.

This explosion fuels scams, spreads misinformation, and manipulates public opinion. For everyday Americans, whether they scroll Facebook, post on X (formerly Twitter), network on LinkedIn, or chat on WhatsApp, the risks include financial fraud, identity theft, election interference, and eroded trust in online communities.

The Massive Scale of AI Fake Accounts in 2026

The numbers are staggering, though estimates vary widely:

- Meta (Facebook, Instagram) removed hundreds of millions of fake accounts per quarter in 2025, with AI-driven detection catching most of them before users reported them. Even so, estimates suggest that 4% or more of active users (over 140 million across Meta's apps) could still be fake or bot-operated.

- On X, bot activity remains high: some analyses estimate that 20–64% of accounts may be automated or inauthentic, with the highest shares around high-engagement topics such as politics, crypto, and news.

- Broader studies find that bots generate 20–50% of online conversation around major global events, with spikes during elections and crises, often using AI to produce realistic, multilingual content.

- Cybersecurity forecasts warn that AI scams and fake profiles could nearly double in 2026, as new tools enable mass creation of synthetic identities that mimic real people, complete with histories, photos, and connections.

AI lowers the barrier to entry: a single operator can spin up thousands of profiles in hours with tools such as Grok, Midjourney, or open-source LLMs, making coordinated campaigns both easier to run and harder to detect.

Why AI Makes Fake Accounts Far More Dangerous

Unlike old-school bots, which posted repetitive and obvious spam, modern AI fakes are sophisticated:

- Realistic Appearance: AI-generated photos, consistent posting styles, and contextual replies that blend seamlessly with human content.

- Personalization & Scale: LLMs craft tailored messages in English or any other language, drawing on cultural references, current events, and user data scraped from profiles.

- Coordinated Harm: Networks amplify scams (crypto fraud, investment schemes), deepfakes, misinformation, and harassment, reaching millions of users quickly.

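- Evolving Tactics: Bot networks now tend to form star-like interaction structures (many accounts connecting to a few hubs), while human networks show hierarchical patterns, so detection increasingly has to analyze whole networks rather than individual accounts, which makes it trickier.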
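The contrast between star-like bot networks and hierarchical human networks can be made concrete with a standard graph measure, Freeman degree centralization. The sketch below is a minimal illustration, not how any platform actually detects bots: it scores a small interaction graph from 0 to 1, where 1.0 means a single hub dominates (a perfect star) and lower values mean interactions are spread out, as in a hierarchy. The function name, account names, and both example networks are hypothetical.

```python
from collections import defaultdict


def degree_centralization(edges):
    """Freeman degree centralization of an undirected interaction graph.

    Returns 1.0 for a perfect star (one hub, every other account linked only
    to it) and lower values as interactions spread across many accounts.
    """
    # Treat each interaction as undirected and count each pair only once.
    pairs = {frozenset((a, b)) for a, b in edges if a != b}
    degree = defaultdict(int)
    for pair in pairs:
        for account in pair:
            degree[account] += 1

    n = len(degree)
    if n < 3:
        return 0.0  # the measure is undefined for fewer than 3 accounts

    max_deg = max(degree.values())
    # Total shortfall of every account relative to the busiest hub,
    # normalized by the largest possible value (reached only by a star).
    shortfall = sum(max_deg - d for d in degree.values())
    return shortfall / ((n - 1) * (n - 2))


# Hypothetical star: one hub account and nine accounts that interact only with it.
star_edges = [("hub", f"bot{i}") for i in range(9)]

# Hypothetical hierarchy: a small two-level tree of human accounts.
tree_edges = [
    ("alice", "bob"), ("alice", "carol"),
    ("bob", "dave"), ("bob", "erin"),
    ("carol", "frank"), ("carol", "grace"),
]

print(f"star-like network:    {degree_centralization(star_edges):.2f}")
print(f"hierarchical network: {degree_centralization(tree_edges):.2f}")
```

Run as written, this prints 1.00 for the star and 0.30 for the tree. A real detector would combine a measure like this with many other signals; a high score alone proves nothing, since legitimate celebrity and brand accounts also attract star-shaped followings.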

For Americans, this means higher exposure to:

- Financial Scams: Fake investment ads, romance fraud, and “family emergency” messages that trick people into sending money.

- Misinformation & Polarization: AI bots spreading false election claims, health myths, and divisive narratives, which are especially potent in politically charged years such as 2026, a US midterm election year.

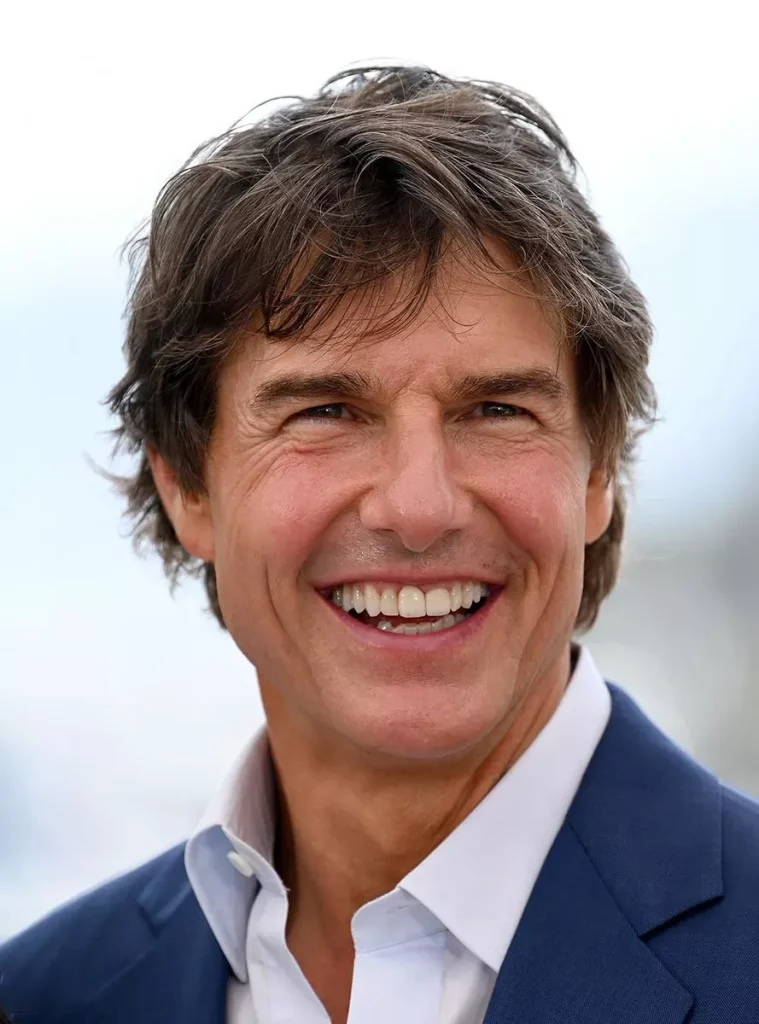

- Deepfakes & Impersonation: Realistic video and audio of politicians, celebrities, or loved ones used for fraud or harassment.

- Everyday Risks: Phishing links in DMs, fake job offers on LinkedIn, or spam overwhelming feeds.

Some experts warn that 2026 could be a “tipping point” as AI agents and related tools make fraud more scalable and more convincing.

Platform Responses: Progress Amid Challenges

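- Meta: Proactively removes more than 99% of the fake accounts it takes down before users report them, and pairs behavioral detection with AI-content labels (introduced as “Made with AI”, since renamed “AI info”). Even so, it still has to take billions of enforcement actions every quarter.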

- X: Has expanded verification, CAPTCHA checks, and API restrictions on spammy apps, and has revoked API access for some crypto projects that mass-posted low-quality, AI-generated content (“AI slop”).

- LinkedIn & Others: Stronger identity checks and anomaly detection to curb fake professional profiles.

- Regulatory Push: There are growing calls for stricter rules on AI labeling, bans on coordinated inauthentic behavior, and greater transparency, though enforcement lags behind.

The arms race continues: platforms keep improving detection, but AI tools are evolving faster.

How Americans Can Protect Themselves in 2026

- Verify Suspicious Contacts: Before engaging, confirm the person's identity through a voice or video call or a channel you already trust, especially for “emergency” messages or investment pitches.

- Spot Red Flags: Watch for new accounts with AI-looking photos (run a reverse image search), generic bios, sudden bursts of activity, or repetitive posting patterns.

- Tighten Privacy: Limit who can message or tag you, review your followers, and block and report fakes promptly.

- Enable Security Features: Turn on two-factor or multi-factor authentication (2FA/MFA) for every account, and avoid clicking unfamiliar links in DMs or comments.

- Be Skeptical of Content: Cross-check viral claims with reliable sources; watch for deepfake signs (unnatural lip sync, lighting inconsistencies).

- Report Proactively: Use platform tools to flag bots and spam; your reports also help train the platforms' AI detectors.

- Educate & Stay Informed: Share these tips with family and friends, and follow updates on emerging threats from the FTC, the FBI, and cybersecurity firms.

AI fake accounts aren’t just a tech problem; they erode the authenticity of the online spaces Americans use every day. Vigilance, verification, and platform accountability are key to fighting back.

ClickUSANews.com covers cybersecurity threats, social media trends, and digital safety for Americans. Stay informed—follow for updates on AI risks, scam alerts, and protection guides.
